Efficient training of CNNs and a pilot project for the Max78000

In this project we present new tools for efficiently generating training and test data sets for CNNs, together with a real-world pilot project for the Max78000. The pilot project aims at detecting the sounds of the Red Palm Weevil, a dangerous insect pest.

Please see the attached PDF for the full description with pictures.

Project outline, June 30th 2021

by Sebastian Huebner PhD

Email: sebastian@sejona.de

Teaching is the new programming

Introduction

Many people are in need of smart devices that are able to solve highly specific non-speech audio pattern recognition (APR) tasks.

Applications can be found in countless areas in the industry (e.g. acoustic monitoring of high pressure valves), in agriculture (e.g. acoustic pest control), in nature conservation (e.g. acoustic detection of invasive species), geology (e.g. earthquake monitoring) or in noise control (e.g. monitoring of railroad noises).

Millions of little sounds occur almost everywhere: squeaking, whistling, creaking, cracking, rumbling, roaring, swinging, smacking, rubbing, rattling, munching, barking, growling, singing, hissing, grinding, popping, rattling, trembling etc. Such sounds can provide useful information about the state of machines, plants, animals, ecosystems or even the ground we stand on.

In many APR scenarios bulky PC hardware proved to be not very useful. Cost is a major issue here, but also size and power consumption. Customers are much more likely to want cheap, small, and easy to use APR devices running at the edge.

This is where the Max78000 comes in right. The convolutional neural network (CNN) accelerator relieves the CPU so that many APR tasks can be solved quickly. Also the chip does not consume much power, so that acoustic processes can be monitored over long periods of time in battery mode.

The CNN training and test data set problem

Even the best CNN is useless unless it is trained properly. Training and test of CNNs requires a lot of audio samples. To make an application work, it is vital to have samples be selected and extracted automatically from recordings (unless you want to select thousands of audio snippets by hand). Note that ready to use data sets are available only in a few cases.

Generation of proper training and test (TT) samples can be a hard problem. In fact, it is one of the biggest obstacles on the way to new viable CNN based APR applications. Working time is a major issue here, but also lack of proper tools and missing knowledge about the given acoustic processes.

To get the job done, an APR software is needed that automatically selects and extracts well formed audio samples from raw data. It is not required that such a software runs on a small computer. But it can’t be CNN based as training this would require exactly the TT data set that is not yet available. So, a more suitable approach is needed. And this is where this project comes in right.

Approach

Non speech APR is not a trivial task. The nature of the underlying acoustic processes, the acoustic environment, feature extraction methods and the audio hardware are all deeply intertwingled:

To address these problems the author created a software called Sound Recognition Suite (SRSuite). (Initially, it was called DSProlog and developed for a PHD thesis (2007) on knowledge based audio signal classification with applications in bioacoustics.)

SRSuite is based on the idea of getting a better grip on design, test and implementation of basic audio signal classifiers (ASC) through a visual-interactive modeling and knowledge discovery process. Actually, SRSuite is a visual-interactive knowledge discovery support environment (KDSE) for basic ASC design. A free test version of SRSuite can be downloaded from the author’s website.

SRSuite does not use CNNs to classify signals. Instead, ASCs are based on signatures of acoustic processes, similarity measures, thresholds and logical operators. This architecture keeps the ASC modelling process transparent to the human user and covers a great range of basic signal classification tasks. Also signature based ASCs do not necessarily require big TT data sets in order to be functional.

The idea of this project is to marry a modified version of SRSuite called Neural Network Teacher (NNTeacher) into the Max78000 development flow (see Figure 1).

An experimental version of NNTeacher can be downloaded from the author’s website here.

In doing so it should be possible to solve the TT data set problem and create new robust APR solutions within hours. The accuracy of the CNN may be improved and development costs be reduced. Thus we might be able to reach the many customers that are still waiting for APR on the edge.

This marriage is almost a perfect match because NNTeacher has already many tools to generate TT datasets with ease. The supposed place of NNTeacher in the development flow for the Max78000 is shown in Figure 1.

Figure 1: Extended development flow of the MAX78000. (Original diagram by Maxim, NNTeacher-box added by the author)

- Pictures can be seen only in the attached PDF of this project outline

Ideally, we will reach a point when it will become possible to train a Max78000 based APR board within a few steps:

This simple procedure needs to be supervised which should become possible in NNTeacher soon. A trained CNN, while from the inside very different, behaves very similar to a signature based classifier, i.e. it receives input data and outputs a real confidence value between 0 and 1. So it is possible to see how it reacts to audio data and to fine tune various parameters.

Example: Red Palm Weevil Detection

In many Arab countries the date palm industry is a major economic factor. In recent years, millions of palm trees were destroyed by the Red Palm Weevil (RPW). The insect’s larvae eat the palm from the inside. One single healthy tree can be worth several thousand US dollars. The earlier the infestation is detected the better the chances for healing. From the outside nothing can be seen in the initial phase of infestation. Once the infestation can be seen with the naked eye the tree is virtually dead.

Scientific research shows that structure-borne sounds caused by RPW larvae can be identified automatically. Identification of these sounds is tricky because of many noises that usually occur in the audio data. The APR engine needs to be very precise (which is computationally expensive) and must be adjusted to given environments (e.g. to traffic noises) otherwise results will be unsatisfactory.

So why not attach to date palms smart APR devices that are able to timely recognize when a tree becomes infested? Indeed, this would be a wonderful application for a well educated Max78000 board.

The author (having been involved research on RPW bioacoustics previously) compiled a sample project for NNTeacher in order to demonstrate how easy it is to get a TT data set from a few recordings.

The RPW demo can be downloaded from the author’s website here

The demo contains:

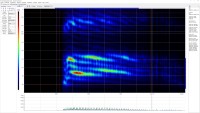

Figure 2: A few seconds of annotated audio data in spectrogram view. RPW cracks are marked with red crosses.The red, green and blue curves below show the response of Cinitialto the audio data.

- Pictures can be seen only in the attached PDF of this project outline

Figure 3: Spectrograms of a few files containing single cracks from the TT data set. Each file is 27.17 ms long.

It is obvious that the generated TT data set is large enough to train a CNN and far to large to be selected manually from the AFC.

Currently the author works on training a CNN with this TT data set and porting it to the Max78000FTHR to check how it performs. This, however, is still quite complicated and the author didn’t achive this goal by the end of the deadline of this competition.

Please feel free to install NNTeacher and download the RPW demo project. Double click the file “RPW demo.srp” to open the demo. Set the project’s audio path to the “Waves/” subdirectory under the project path. Browse the files by double-clicking them and have a look at the classifiers and annotations. Please use SRSuite help if you are unfamiliar with the software and/or the terminology.

Future Project Tasks

Main goal of this project is an improved software framework for TT data set generation and supervised training of CNNs. With this framework it should become possible to generate APR solutions based on the Max78000 with more ease. A real world pilot project on RPW detection with the Max78000FTHR is also being considered.

In the future, the following work packages will have to be mastered:

This project is mid- to long-term. The author strongly appreciates partnerships with both hardware and software manufactures to make it become a reality.

Email of the author: sebastian@sejona.de

Project outline, June 30th 2021

by Sebastian Huebner PhD

Email: sebastian@sejona.de

Teaching is the new programming

Introduction

Many people need smart devices that can solve highly specific non-speech audio pattern recognition (APR) tasks.

Applications can be found in countless areas: in industry (e.g. acoustic monitoring of high-pressure valves), in agriculture (e.g. acoustic pest control), in nature conservation (e.g. acoustic detection of invasive species), in geology (e.g. earthquake monitoring) and in noise control (e.g. monitoring of railroad noise).

Millions of little sounds occur almost everywhere: squeaking, whistling, creaking, cracking, rumbling, roaring, swinging, smacking, rubbing, rattling, munching, barking, growling, singing, hissing, grinding, popping, trembling etc. Such sounds can provide useful information about the state of machines, plants, animals, ecosystems or even the ground we stand on.

In many APR scenarios, bulky PC hardware has proved impractical. Cost is a major issue, but so are size and power consumption. Customers are much more likely to want cheap, small and easy-to-use APR devices running at the edge.

This is where the Max78000 comes in. Its convolutional neural network (CNN) accelerator relieves the CPU, so many APR tasks can be solved quickly. The chip also consumes little power, so acoustic processes can be monitored over long periods of time on battery power.

The CNN training and test data set problem

Even the best CNN is useless unless it is trained properly. Training and testing a CNN requires a lot of audio samples. To make an application work, it is vital that samples are selected and extracted from recordings automatically (unless you want to select thousands of audio snippets by hand). Note that ready-to-use data sets are available only in a few cases.

Generating proper training and test (TT) samples can be a hard problem. In fact, it is one of the biggest obstacles to new, viable CNN-based APR applications. Working time is a major issue here, as are the lack of proper tools and missing knowledge about the acoustic processes involved.

To get the job done, APR software is needed that automatically selects and extracts well-formed audio samples from raw data. Such software does not need to run on a small computer. But it cannot be CNN-based, because training it would require exactly the TT data set that is not yet available. A different approach is therefore needed, and this is where this project comes in.

Approach

Non-speech APR is not a trivial task. The nature of the underlying acoustic processes, the acoustic environment, the feature extraction methods and the audio hardware are all deeply intertwined:

- The nature of the acoustic processes determines which features can be used for classification.

- Various types of noise and background sounds need to be taken into account.

- Microphones, wires, amplifiers and AD converters shape the frequency response curve of the recording system. This curve in turn affects the frequency-related features used for APR.

- Frequently, in the early phase of APR solution development, it is neither obvious nor understood how certain types of audio signals can be classified correctly.
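To make the hardware point concrete, here is a minimal Python sketch (not part of SRSuite or NNTeacher) showing how the frequency response of a recording chain can shift a frequency-related feature. The spectral centroid serves as the example feature; the four-bin spectrum and the high-frequency roll-off of the hypothetical microphone/amplifier chain are made-up values for illustration only.

```python
from typing import Sequence


def spectral_centroid(freqs: Sequence[float], mags: Sequence[float]) -> float:
    """Magnitude-weighted mean frequency of a spectrum, in Hz."""
    total = sum(mags)
    if total == 0:
        raise ValueError("spectrum contains no energy")
    return sum(f * m for f, m in zip(freqs, mags)) / total


def apply_response(mags: Sequence[float], gains: Sequence[float]) -> list[float]:
    """Scale each spectral bin by the recording chain's gain at that frequency."""
    return [m * g for m, g in zip(mags, gains)]


# Hypothetical toy spectrum: equal magnitude at 1, 2, 4 and 8 kHz.
freqs = [1000.0, 2000.0, 4000.0, 8000.0]
mags = [1.0, 1.0, 1.0, 1.0]

# Hypothetical chain (microphone + amplifier) that rolls off high frequencies.
gains = [1.0, 0.9, 0.5, 0.1]

flat = spectral_centroid(freqs, mags)
recorded = spectral_centroid(freqs, apply_response(mags, gains))
print(f"centroid of the sound itself: {flat:.0f} Hz")             # 3750 Hz
print(f"centroid after the recording chain: {recorded:.0f} Hz")   # 2240 Hz
```

The same sound recorded through two different chains can thus yield noticeably different feature values, which is why it helps to record TT material with the hardware that will eventually be deployed.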

To address these problems, the author created a software tool called Sound Recognition Suite (SRSuite). (It was initially called DSProlog and was developed for a PhD thesis (2007) on knowledge-based audio signal classification with applications in bioacoustics.)

SRSuite is based on the idea of getting a better grip on the design, testing and implementation of basic audio signal classifiers (ASCs) through a visual-interactive modeling and knowledge discovery process. In fact, SRSuite is a visual-interactive knowledge discovery support environment (KDSE) for basic ASC design. A free test version of SRSuite can be downloaded from the author’s website.

SRSuite does not use CNNs to classify signals. Instead, its ASCs are based on signatures of acoustic processes, similarity measures, thresholds and logical operators. This architecture keeps the ASC modeling process transparent to the human user and covers a wide range of basic signal classification tasks. In addition, signature-based ASCs do not necessarily require large TT data sets to be functional.
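The following Python sketch illustrates the general idea of such a classifier: elementary tests compare a feature vector with a stored signature via a similarity measure and a threshold, and logical operators combine the tests. It is a hypothetical illustration, not SRSuite’s implementation; the cosine similarity, the 4-band energy features and the signature values are assumptions.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence

Features = Sequence[float]
Rule = Callable[[Features], bool]


def cosine_similarity(a: Features, b: Features) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


@dataclass
class SignatureTest:
    """Elementary test: is the input similar enough to a reference signature?"""
    signature: Features
    threshold: float

    def __call__(self, features: Features) -> bool:
        return cosine_similarity(features, self.signature) >= self.threshold


def all_of(*rules: Rule) -> Rule:
    """Logical AND: every rule must fire."""
    return lambda features: all(r(features) for r in rules)


def none_of(*rules: Rule) -> Rule:
    """Logical NOR: no rule may fire (useful for rejecting known noises)."""
    return lambda features: not any(r(features) for r in rules)


# Hypothetical 4-band energy signatures for an RPW-like crack and for traffic noise.
crack = SignatureTest(signature=[0.1, 0.3, 0.9, 0.6], threshold=0.9)
traffic = SignatureTest(signature=[0.9, 0.5, 0.1, 0.0], threshold=0.8)
is_crack = all_of(crack, none_of(traffic))

print(is_crack([0.1, 0.35, 0.85, 0.55]))   # close to the crack signature
print(is_crack([0.95, 0.45, 0.1, 0.05]))   # close to the traffic signature
```

Because every decision can be traced back to a signature, a threshold and an operator, a human can inspect and adjust each part, which is the transparency mentioned above.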

The idea of this project is to marry a modified version of SRSuite, called Neural Network Teacher (NNTeacher), with the Max78000 development flow (see Figure 1).

An experimental version of NNTeacher can be downloaded from the author’s website.

By doing so, it should be possible to solve the TT data set problem and create new, robust APR solutions within hours. CNN accuracy may improve and development costs may drop. Thus we might be able to reach the many customers who are still waiting for APR at the edge.

This marriage is an almost perfect match because NNTeacher already has many tools for generating TT data sets with ease. The proposed place of NNTeacher in the Max78000 development flow is shown in Figure 1.

Figure 1: Extended development flow of the MAX78000. (Original diagram by Maxim, NNTeacher-box added by the author)

(Pictures can be seen only in the attached PDF of this project outline.)

Ideally, we will reach a point where a Max78000-based APR board can be trained in a few steps:

- Create sample recordings with the APR board and the microphone that will ultimately be used (see the point on recording hardware under Approach). Collect the recordings in an audio file collection (AFC) large enough to extract all necessary TT data.

- Explore the AFC with NNTeacher in order to understand the nature of the acoustic signals that need to be classified (see the points on the nature of the acoustic processes and on the early phase of development under Approach).

- Estimate how hard the given APR task is and create in NNTeacher a set of classifiers C_initial suitable for selecting TT samples from the AFC.

- Use C_initial to create a TT data set automatically. Refine C_initial if necessary and iterate this step until the desired TT data set is ready.

- Select or create in PyTorch an untrained CNN that roughly matches the difficulty of the APR task.

- Train and optimize the CNN. If the CNN does not work as expected, go back to the previous step and select or create a different CNN.

- Load the optimized CNN onto the Max78000 APR board and run a final test. Done!
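The step that uses C_initial to create a TT data set automatically requires turning a classifier’s response over time into discrete annotations. This outline does not describe how NNTeacher does this, so the following Python sketch shows just one plausible approach under stated assumptions: annotate each peak of a frame-wise confidence curve that exceeds a threshold, and enforce a minimum gap so that one event yields one annotation. The function name, the threshold, the gap and the frame rate are hypothetical.

```python
from typing import Sequence


def pick_events(
    confidence: Sequence[float],
    frame_rate_hz: float,
    threshold: float = 0.5,
    min_gap_s: float = 0.02,
) -> list[float]:
    """Turn a frame-wise confidence curve into event times in seconds.

    A frame counts as a peak if its confidence reaches `threshold`, is not
    lower than the previous frame and is higher than the next one. Peaks
    closer than `min_gap_s` to the last accepted peak are skipped, so that
    one acoustic event yields one annotation.
    """
    min_gap_frames = max(1, round(min_gap_s * frame_rate_hz))
    accepted: list[int] = []
    for i, c in enumerate(confidence):
        if c < threshold:
            continue
        left = confidence[i - 1] if i > 0 else float("-inf")
        right = confidence[i + 1] if i + 1 < len(confidence) else float("-inf")
        is_peak = c >= left and c > right
        if is_peak and (not accepted or i - accepted[-1] >= min_gap_frames):
            accepted.append(i)
    return [i / frame_rate_hz for i in accepted]


# Hypothetical response curve of a classifier, sampled at 100 frames per second.
curve = [0.0, 0.2, 0.9, 0.4, 0.1, 0.1, 0.7, 0.95, 0.3, 0.0]
print(pick_events(curve, frame_rate_hz=100.0))  # [0.02, 0.07]
```

Raising the threshold trades missed events for fewer false annotations; iterating on such parameters corresponds to refining C_initial until the TT data set looks right.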

This simple procedure needs to be supervised, which should soon become possible in NNTeacher. A trained CNN, although very different on the inside, behaves much like a signature-based classifier: it receives input data and outputs a real-valued confidence between 0 and 1. It is therefore possible to see how it reacts to audio data and to fine-tune various parameters.
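A common interface makes this kind of supervision easy to sketch. In the hypothetical Python code below, a signature-style classifier (the “teacher”) and a stand-in for a trained CNN (the “student”: any function returning a raw score, passed through a logistic sigmoid) both expose a `confidence(features)` method returning a value in [0, 1], so they can be run on the same inputs and their decisions compared. The class names, the toy similarity measure and the linear stand-in for the CNN are assumptions for illustration, not an API of NNTeacher or of Maxim’s tools.

```python
import math
from typing import Callable, Protocol, Sequence

Features = Sequence[float]


class ConfidenceClassifier(Protocol):
    """Anything that maps input features to a confidence value in [0, 1]."""

    def confidence(self, features: Features) -> float: ...


class SignatureClassifier:
    """Toy signature-style classifier: 1 minus the mean absolute deviation from a signature."""

    def __init__(self, signature: Features) -> None:
        self.signature = list(signature)

    def confidence(self, features: Features) -> float:
        deviation = sum(abs(x - s) for x, s in zip(features, self.signature)) / len(self.signature)
        return min(1.0, max(0.0, 1.0 - deviation))


class LogitModel:
    """Wraps any model that outputs a raw score (logit), e.g. a trained CNN."""

    def __init__(self, logit_fn: Callable[[Features], float]) -> None:
        self.logit_fn = logit_fn

    def confidence(self, features: Features) -> float:
        z = self.logit_fn(features)
        # Numerically stable logistic sigmoid: maps any real z into [0, 1].
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)


def agreement(a: ConfidenceClassifier, b: ConfidenceClassifier,
              samples: Sequence[Features], threshold: float = 0.5) -> float:
    """Fraction of samples on which both classifiers make the same yes/no decision."""
    same = sum(
        (a.confidence(s) >= threshold) == (b.confidence(s) >= threshold) for s in samples
    )
    return same / len(samples)


# Hypothetical data: 4-band energies of a crack-like snippet and of a noise snippet.
samples = [[0.1, 0.35, 0.85, 0.55], [0.95, 0.45, 0.1, 0.05]]
teacher = SignatureClassifier(signature=[0.1, 0.3, 0.9, 0.6])
student = LogitModel(lambda f: 8.0 * (f[2] - 0.5))  # stand-in for a trained CNN
print(agreement(teacher, student, samples))  # 1.0
```

Low agreement on a batch of recordings would be a signal to inspect the disagreeing snippets before trusting either classifier.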

Example: Red Palm Weevil Detection

In many Arab countries, the date palm industry is a major economic factor. In recent years, millions of palm trees have been destroyed by the Red Palm Weevil (RPW). The insect’s larvae eat the palm from the inside. A single healthy tree can be worth several thousand US dollars. The earlier an infestation is detected, the better the chances of saving the tree. In the initial phase of infestation, nothing can be seen from the outside. Once the infestation is visible to the naked eye, the tree is virtually dead.

Scientific research shows that structure-borne sounds caused by RPW larvae can be identified automatically. Identifying these sounds is tricky because of the many noises that usually occur in the audio data. The APR engine needs to be very precise (which is computationally expensive) and must be adjusted to the given environment (e.g. to traffic noise); otherwise, results will be unsatisfactory.

So why not attach smart APR devices to date palms that recognize in time when a tree becomes infested? This would be a wonderful application for a well-educated Max78000 board.

The author, who has previously been involved in research on RPW bioacoustics, compiled a sample project for NNTeacher to demonstrate how easy it is to obtain a TT data set from a few recordings.

The RPW demo can be downloaded from the author’s website.

The demo contains:

- An AFC with 4 files recorded on 4 different infested trees. Each recording is 10 min long. The data contains thousands of structure-borne RPW crack sounds (see Figure 2). The cracks result from cellulose fibers being torn apart by feeding larvae.

- An optimized signature-based terminal classifier C_initial for typical crack sounds.

- A data set in 4 tables containing 5210 annotations of RPW crack sounds created by C_initial.

- A TT data set with a total of 5210 standardized, 27.17 ms long audio files extracted from the annotated AFC (see Figure 3).
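The outline gives the snippet length but not the sample rate or the exact cutting procedure. As a hypothetical Python sketch: assuming 44.1 kHz audio, 27.17 ms corresponds to round(0.02717 × 44 100) = 1198 samples, and a snippet can be cut centered on each annotation time, zero-padded at file boundaries and peak-normalized. The sample rate, the centering, the padding and the normalization are all assumptions about what “standardized” means here.

```python
from typing import Sequence

SAMPLE_RATE_HZ = 44_100  # assumption: the outline does not state the sample rate
SNIPPET_S = 0.02717      # snippet length used in the RPW demo (27.17 ms)
SNIPPET_LEN = round(SNIPPET_S * SAMPLE_RATE_HZ)  # 1198 samples


def extract_snippet(signal: Sequence[float], center_s: float,
                    sample_rate_hz: int = SAMPLE_RATE_HZ,
                    length: int = SNIPPET_LEN) -> list[float]:
    """Cut a fixed-length window centered on an annotation time.

    Samples outside the recording are zero-padded; the result is
    peak-normalized to a maximum absolute amplitude of 1
    (an all-zero snippet is returned unchanged).
    """
    start = round(center_s * sample_rate_hz) - length // 2
    snippet = [signal[i] if 0 <= i < len(signal) else 0.0
               for i in range(start, start + length)]
    peak = max(abs(x) for x in snippet)
    return [x / peak for x in snippet] if peak > 0 else snippet


def extract_all(signal: Sequence[float], annotations_s: Sequence[float]) -> list[list[float]]:
    """One standardized snippet per annotation time (in seconds)."""
    return [extract_snippet(signal, t) for t in annotations_s]


# Hypothetical test signal: 1 s of silence with a single click at 0.5 s.
signal = [0.0] * SAMPLE_RATE_HZ
signal[22_050] = -0.25
snippets = extract_all(signal, [0.5, 0.001])
print(len(snippets), [len(s) for s in snippets])  # 2 [1198, 1198]
```

Reading the WAV files (e.g. with Python’s standard `wave` module) and writing the snippets back to disk are omitted for brevity.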

Figure 2: A few seconds of annotated audio data in spectrogram view. RPW cracks are marked with red crosses.The red, green and blue curves below show the response of Cinitialto the audio data.

(Pictures can be seen only in the attached PDF of this project outline.)

Figure 3: Spectrograms of a few files containing single cracks from the TT data set. Each file is 27.17 ms long.

The generated TT data set is clearly large enough to train a CNN and far too large to be selected manually from the AFC.
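The demo figures make this easy to quantify. A quick back-of-the-envelope calculation using only the numbers given above (4 recordings of 10 min each, 5210 annotations, 27.17 ms per snippet):

$$
\begin{aligned}
T_{\mathrm{AFC}} &= 4 \times 10\ \mathrm{min} = 40\ \mathrm{min} = 2400\ \mathrm{s} \\
r_{\mathrm{crack}} &= \frac{5210}{2400\ \mathrm{s}} \approx 2.17\ \mathrm{s}^{-1} \approx 130\ \mathrm{min}^{-1} \\
T_{\mathrm{TT}} &= 5210 \times 27.17\ \mathrm{ms} \approx 141.6\ \mathrm{s}
\end{aligned}
$$

Selecting these snippets by hand would thus mean locating and cutting out, on average, more than two cracks per second across 40 minutes of audio, even though the resulting TT data set amounts to only about 2.4 minutes of audio.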

The author is currently working on training a CNN with this TT data set and porting it to the Max78000FTHR to check how it performs. This, however, is still quite complicated, and the author did not achieve this goal before the competition deadline.

Please feel free to install NNTeacher and download the RPW demo project. Double-click the file “RPW demo.srp” to open the demo. Set the project’s audio path to the “Waves/” subdirectory under the project path. Browse the files by double-clicking them and have a look at the classifiers and annotations. Please consult the SRSuite help if you are unfamiliar with the software and/or the terminology.

Future Project Tasks

The main goal of this project is an improved software framework for TT data set generation and supervised training of CNNs. With this framework, it should become easier to build APR solutions based on the Max78000. A real-world pilot project on RPW detection with the Max78000FTHR is also being considered.

In the future, the following work packages will have to be mastered:

- Develop missing software components and interfaces to make the TT data set generation process easier and more efficient. The software framework should also allow interactive supervision of the CNN training process.

- Create a set of standardized untrained CNN models that suit 95% of typical non-speech APR tasks. With such generalized models at hand, it might be possible to speed up APR development even more.

- Conduct a pilot APR project using the Max78000FTHR hardware: estimate the performance of the Max78000 in real-world RPW detection (see the sample project above). This task was chosen because the RPW scenario has high economic potential and should not be too difficult for the chip.

- If all works out well, an improved APR board based on the Max78000 might be built. It should be similar to the Max78000FTHR but with a few components removed to make it even smaller.

This project is mid- to long-term. The author would greatly appreciate partnerships with both hardware and software manufacturers to make it a reality.

Email of the author: sebastian@sejona.de
